This is a continuation of my previous blog post. The goal is to sum up some lessons that I've learned during the last couple of months while I was involved in performance tuning a large-scale distributed web-app.

Golden Rules

Although there are probably many more rules and good advice out there, these are the ones that I remember off the top of my head as being important:

Change one thing at a time

I was often tempted to violate this rule. The problem with breaking it is easily illustrated with an example: imagine that you make two changes, c1 and c2, at the same time. If c1 results in a performance improvement of 20% and c2 in a performance penalty of 30%, you'll measure an overall deterioration of roughly 10%. Consequently, you'll decide not to implement either change, even though c1 on its own would have resulted in better performance.
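
To make the arithmetic concrete, here is a small sketch. It assumes the two effects combine either additively (as in the example) or multiplicatively on throughput; the function names are made up for illustration.

```python
def combined_additive(*changes: float) -> float:
    """Net relative change if the individual effects simply add up."""
    return sum(changes)


def combined_multiplicative(*changes: float) -> float:
    """Net relative change if each effect scales throughput in turn."""
    factor = 1.0
    for change in changes:
        factor *= 1.0 + change
    return factor - 1.0


c1, c2 = 0.20, -0.30  # +20% and -30%, the figures from the example

print(f"additive:       {combined_additive(c1, c2):+.0%}")
print(f"multiplicative: {combined_multiplicative(c1, c2):+.0%}")
```

Under either assumption the combined measurement is negative, so the gain from c1 stays hidden unless it is measured on its own.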

Look at the system as a whole and fix the slowest running part

Even if you can make some part of the system thousands of times faster, it will not affect your application's performance if the part you changed was not the primary bottleneck. For example, it doesn't make sense to optimise application code if the bottleneck is a slow-running database query. I'd even go as far as saying that it is harmful to optimise parts of the system when it isn't needed. Firstly, it's a waste of time that could be spent on tasks that provide more value. Secondly, performance optimisations often introduce additional complexity at the code level. If you can't justify this extra complexity with a significant performance boost, don't do it. Of course, I'm not advocating against common sense and sound software design principles. For example, I know that making lots of fine-grained RPC calls is a bad idea, so I'll avoid it in the first place.
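
One way to put numbers on this is Amdahl's law. The sketch below is only an illustration: the 5%/95% split between application code and database query is a made-up assumption, not a measurement from the project.

```python
def overall_speedup(fraction: float, local_speedup: float) -> float:
    """Amdahl's law: overall speedup when a part that accounts for
    `fraction` of total time is made `local_speedup` times faster."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    if local_speedup <= 0:
        raise ValueError("local_speedup must be positive")
    return 1.0 / ((1.0 - fraction) + fraction / local_speedup)


# Hypothetical split: 5% of request time in application code, 95% in a slow query.
print(round(overall_speedup(0.05, 1000), 3))  # application code 1000x faster
print(round(overall_speedup(0.95, 2), 3))     # query merely 2x faster
```

With these assumed numbers, making the application code a thousand times faster gains about 5% overall, while merely halving the query time nearly doubles overall speed.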

Don't optimise prematurely, i.e. without measuring

This one probably goes hand in hand with the rule above. Don't optimise unless you can prove that it will have an effect on overall system performance. Again, this rule is not an excuse for not using sound software design principles.

Performance Testing Cycle

Keeping the above rules in mind, we continuously iterated through the following cycle:

- Measure performance

- Identify single bottleneck (i.e. pick lowest hanging fruit)

- Fix single bottleneck

- Verify performance has improved

Once the last step is complete, the cycle restarts. Sometimes we would loop through this cycle several times a day. At other times, one loop would take us several days or even weeks. This process essentially continued until our release target was reached.

Measuring Performance

We used JMeter to generate load against the application under test. We set up the tests so that the generated load would increase over time and therefore put the application under increasing stress. While running the tests, we measured a number of parameters. The most important ones were throughput, average response time and CPU utilisation.
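
As a rough illustration of how throughput and average response time can be derived from raw request samples, here is a minimal sketch. It is not how JMeter computes its reports; the sample format, the window size and the function name are assumptions made for this example.

```python
from collections import defaultdict
from statistics import mean


def summarise(samples, window_s=60):
    """Group (start_time_s, elapsed_ms) samples into fixed time windows and
    return, per window, (window_start_s, throughput_per_s, avg_response_ms)."""
    windows = defaultdict(list)
    for start_s, elapsed_ms in samples:
        windows[int(start_s // window_s) * window_s].append(elapsed_ms)
    return [
        (start, len(elapsed) / window_s, mean(elapsed))
        for start, elapsed in sorted(windows.items())
    ]


# Made-up samples: two requests in the first minute, three in the second.
samples = [(5, 100), (30, 140), (65, 200), (90, 220), (110, 240)]
for window_start, throughput, avg_ms in summarise(samples):
    print(window_start, round(throughput, 3), avg_ms)
```

Plotting these per-window values against the generated load gives the kind of chart discussed in this section.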

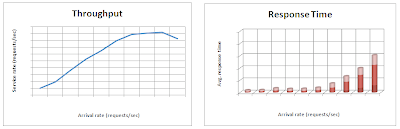

Looking at charts similar to the ones shown here, we got a fairly good understanding of how much load the application under test could handle.

In the above charts, for example, you can see that, at some point, application throughput reaches a plateau while the average response time per transaction continues to grow. At this point, the application has reached some physical or logical limit that prevents it from doing more work. The challenge, of course, is to find out what those constraints are in order to increase throughput or reduce response times.
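
Reading the plateau off a chart is usually enough, but it can also be located programmatically. The following is a minimal sketch under stated assumptions: the 5% threshold, the function name and the measurements are invented for illustration.

```python
def find_plateau(points, min_gain=0.05):
    """Return the first load level after which throughput grows by less than
    `min_gain` (relative) in the next step, or None if it keeps growing.

    `points` is a list of (load, throughput) pairs sorted by load.
    """
    for (load, tput), (_, next_tput) in zip(points, points[1:]):
        if tput > 0 and (next_tput - tput) / tput < min_gain:
            return load
    return None


# Made-up measurements: (concurrent users, requests per second).
measurements = [(10, 100), (20, 195), (30, 280), (40, 290), (50, 288)]
print(find_plateau(measurements))  # 30
```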

Identifying Bottlenecks

Bottlenecks created by hardware constraints are normally quite easy to identify. Typical symptoms are CPU utilisation at its maximum, saturated network bandwidth, etc. The solution is often to change and restructure application code. Identifying bottlenecks not directly created by hardware constraints is more difficult. Likely causes are slow-running external systems, resource starvation, suboptimal configuration settings, etc.

In the last project, we ruled out early on the hypothesis that slow-running external systems were constraining our system by taking them out of the equation completely and using stub implementations instead. At the same time, this made our performance tests faster and much more robust and reliable.
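
The idea of a stub can be sketched as follows. This is not the project's code: `PricingService`, `StubPricingService` and `order_total` are hypothetical names chosen for illustration, and the fixed delay is an assumption about how one might keep response times predictable.

```python
import time
from typing import Protocol


class PricingService(Protocol):
    """Hypothetical interface to an external system."""

    def get_price(self, product_id: str) -> float: ...


class StubPricingService:
    """Stand-in that returns canned data after a fixed, configurable delay."""

    def __init__(self, latency_s: float = 0.0, price: float = 9.99) -> None:
        self.latency_s = latency_s
        self.price = price

    def get_price(self, product_id: str) -> float:
        if self.latency_s:
            time.sleep(self.latency_s)  # simulate a predictable response time
        return self.price


def order_total(service: PricingService, product_ids: list[str]) -> float:
    """Application code depends only on the interface, not the real system."""
    return sum(service.get_price(pid) for pid in product_ids)


print(order_total(StubPricingService(price=2.5), ["a", "b", "c"]))  # 7.5
```

Because the application code only sees the interface, the real client and the stub can be swapped through configuration without touching the code under test.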

Fixing Bottlenecks

In the first few weeks of our performance tuning initiative, we made quite a lot of progress. There was a large number of easily identifiable bottlenecks that were relatively trivial to fix. These included simple programming errors, unnecessary database calls, unnecessary network calls, slow-running SQL queries, missing caching where data was easily cacheable, concurrency issues, etc.

After some time, however, it started to get more difficult to identify bottlenecks. In particular, there was one case that I think is worth writing about.

Garbage Collection

We had already spent several weeks trying to identify a bottleneck that was not obviously caused by hardware constraints. Here are the things we noticed:

- Throughput reached a plateau at point t

- Response time grew significantly at the same point t

- Hardware was far from being exhausted. CPU utilisation, for example, was about 60% at point t

- Although total CPU utilisation was around 60%, one (of eight) cores was maxing out occasionally

The last point suggested that a CPU-intensive task was probably executing in a single thread, and hence on a single core. One such task that we could think of was garbage collection. We verified this using Perfmon and found that GC was indeed taking up a large amount of processing time (up to 30%).
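
The symptom described above (moderate average utilisation with one core saturated) can be expressed as a simple check. This is a sketch only: the thresholds and the per-core snapshot are made-up values, and collecting the real numbers is left to whatever monitoring tool is in use.

```python
def single_core_saturated(per_core, busy=0.95, average_cap=0.80):
    """True if at least one core is (almost) maxed out while the average
    utilisation across all cores is still well below saturation."""
    average = sum(per_core) / len(per_core)
    return max(per_core) >= busy and average < average_cap


# Made-up snapshot of an eight-core machine (fractions of full utilisation).
snapshot = [1.00, 0.55, 0.50, 0.58, 0.52, 0.60, 0.54, 0.51]
print(round(sum(snapshot) / len(snapshot), 2), single_core_saturated(snapshot))
```

A result of `True` is a hint that some work is serialised on one thread; it does not say which task is responsible.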

As a result, we did some reading on how the .NET GC works. We learned that, by default, the GC is optimised for standalone apps running on single-core machines (Workstation GC). On multiprocessor machines, however, an additional GC mode is available (Server GC). The main difference between the two is that Server GC creates a separate GC heap and GC thread for each processor and that collection occurs in parallel. Here's the change we made to our configuration:

    <configuration>
      <runtime>
        <gcServer enabled="true" />
      </runtime>
    </configuration>

After making the above configuration change, the throughput of our application increased by almost a factor of 3! At the same time, we were again reaching 100% CPU utilisation, and average GC time was down to 2-3%.

Of course, this dramatic change meant that we were dealing with a completely new application profile. Consequently, we restarted the iterative cycle described above from the beginning in order to find the next bottleneck.

Conclusion

The fundamental prerequisite for effective performance tuning is a set of repeatable and reliable performance tests. Ideally, these tests are easy to execute, finish in a reasonable amount of time and give you rapid feedback on how the application is performing. You'll also need an isolated environment that allows you to deploy new versions of the application easily and frequently. This gives you a good platform for experimenting with changes. Measuring the effect of each change on overall application performance then gives you the ability to make informed choices.